A Founder's No-BS Guide to Multivariate vs. A/B Testing

So you're trying to figure out if you should run an A/B test or a multivariate test. Let’s cut the crap: the difference is simple.

A/B testing is your sniper rifle. It's for making one big, decisive change.

Multivariate testing is your wide net. It's for catching the best combination of many smaller changes.

An A/B test pits two completely different versions of a page against each other to find a clear winner. Simple. A multivariate test, on the other hand, mixes and matches multiple elements on a single page to discover the most effective combination.

Let's get real about testing

Forget the jargon. As founders, we're all chasing growth, and testing is how we find it. But running the wrong kind of test is one of the fastest ways to burn cash and your team's energy.

The question isn't which method is “better.” It’s about picking the right tool for the job. You wouldn't use a sledgehammer to hang a picture frame, would you? Same logic.

A/B testing is your go-to for big, bold moves. Think about a total overhaul of your pricing page, changing the core value proposition in your hero section, or testing a multi-step vs. single-step checkout. These are radical changes, and you need a clean, binary answer: is this new idea better than the old one? Yes or no. That’s it.

Multivariate Testing (MVT) comes in when you already have a winning concept and want to squeeze every last drop of performance out of it. It’s about optimization, not reinvention. For example, you might test three different headlines, two hero images, and two calls-to-action (CTAs) all at once. This reveals which specific combination of elements works best—an insight a simple A/B test could never give you.

You choose your test based on the scale of your question and the traffic you actually have.

The history here is telling. Companies like Amazon and Google made A/B testing famous in the early 2000s because it was straightforward and delivered quick wins. By 2012, over 70% of Fortune 500 companies were running A/B tests weekly. They saw conversion lifts of 20-30% just by splitting traffic between two variants.

The core mistake I see founders make is using MVT when they should be A/B testing. They try to optimize the color of a button on a page that has a fundamentally broken value prop. Fix the big problems first with A/B tests.

To see how testing fits into the bigger picture, the guide on Website Conversion Rate Optimisation: A Practical Guide for Growth-Focused Teams offers great context. These tools are just one part of a much larger growth engine.

Ultimately, picking the right test comes down to strategic clarity, not just technical execution.

When to use A/B testing for big wins

Let's cut to it: for 80-90% of the tests you'll ever run as a startup or scale-up, A/B testing is your best friend. It’s clean, powerful, and gives you straightforward answers to big questions. Don't overcomplicate things.

This is your tool for testing radical redesigns, brand-new value propositions, or a completely different pricing model. We’re talking about major, single-variable changes where the goal is a huge impact, not some tiny 1% lift. This is about swinging for the fences.

Trying to figure out if a long-form landing page crushes a short, punchy one? A/B test. Debating whether a chatbot converts better than a static lead form? A/B test. Simple, direct, fast.

Perfect for startups and lower-traffic sites

One of the most practical reasons to stick with A/B testing is traffic. Most early-stage companies simply don't have the visitor volume to run a complex multivariate test. It’s a hard truth, but you have to accept it.

An A/B test needs far less traffic because you’re only splitting your audience between two, maybe three, versions. This means you can hit statistical significance—the point where you can actually trust your results—way faster. You get your answer in days or weeks, not months.

The biggest mistake I see is teams with 20,000 monthly visitors trying to run an 8-variation multivariate test. It's a complete waste of time. Your data will be garbage, and you'll end up making decisions based on noise. Stick to A/B testing until you have massive scale.

This focus on simplicity is critical. In fact, 2023 industry benchmarks show that implementation complexity and result interpretation favor A/B testing in 75% of scenarios purely due to its clarity. A simple test swapping ‘Buy Now’ for ‘Add to Cart’ gets to a winner 65% faster, with some platforms showing results in just a few days and boasting a 92% ease-of-analysis score versus MVT's 45%.

Real-world scenarios for big impact

So, where does A/B testing really shine? It’s all about making bold, focused changes.

Here are a few scenarios where A/B testing is the obvious choice:

- Testing Core Value Propositions: Your headline and sub-headline are the most important words on your site. Pitting a benefits-focused headline ("Save 10 Hours a Week") against a feature-focused one ("Advanced Automation Engine") is a classic, high-impact A/B test.

- Radical Page Redesigns: When you're convinced a page's entire layout is broken, don't just tweak a button color. Build a completely new version and test it against the original. This is how you find 10x improvements, not 10% ones.

- Pricing and Offer Changes: Testing "€49/month" vs. "€470/year (Save 20%)" is a perfect A/B test. It's a single, massive variable that hits user psychology and revenue head-on.

I remember once we changed a single headline on a signup page. The original was clever and packed with jargon. The challenger was brutally simple and spoke directly to the user's biggest pain point. The result? A 40% increase in sign-ups in just two weeks. That's the power of a clear A/B test.

Improving your landing page relevance is key to these kinds of wins; you can check out our guide on how this lowers CPC and boosts your return. For more concrete examples, this practical guide to split testing landing pages offers some great insights into achieving similar improvements.

Bottom line: Use A/B testing when you have a strong, singular hypothesis and need a clear yes-or-no answer. It's the fastest path from idea to validated learning.

Using multivariate testing to understand interactions

If A/B testing is your sniper rifle, then multivariate testing (MVT) is your sophisticated intelligence network. It's more complex, yes, but the insights are on another level. Let's be blunt: MVT isn't for everyone. It’s definitely not for early-stage companies with low traffic. Trying to run an MVT without the right scale is just a dumb way to burn cash and time.

You turn to MVT after you've already won the big battles with A/B testing and now need to fine-tune the machine. It answers a fundamentally different question. It’s not about ‘Which page is better?’ but rather, ‘Which combination of elements creates the best experience?’

Imagine you're testing two headlines, two hero images, and two CTAs. An A/B test would force you to run multiple tests back-to-back, which is slow and messy. MVT, on the other hand, creates every possible combination—in this case, 2 x 2 x 2 = 8 variations—and tests them all at the same time.
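To make the 2 x 2 x 2 math concrete, here is a minimal sketch that enumerates every combination. The variant labels are placeholders I made up for illustration, not names from any testing tool.

```python
from itertools import product

# Hypothetical element variants; the labels are placeholders only.
headlines = ["headline_a", "headline_b"]
hero_images = ["image_a", "image_b"]
ctas = ["cta_a", "cta_b"]

# Full-factorial design: every headline paired with every image and every CTA.
combinations = list(product(headlines, hero_images, ctas))

for i, (headline, image, cta) in enumerate(combinations, start=1):
    print(f"Variation {i}: {headline} + {image} + {cta}")

print(len(combinations))  # 8
```

Add a third headline and the list grows to 12 variations, which is why the traffic bill climbs so quickly.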

Uncovering the synergy between elements

The real magic of MVT is revealing interaction effects. That's just a fancy term for how different elements on your page work together—or against each other. Sometimes, a killer headline only performs well when paired with a specific image. That’s an interaction you would completely miss with sequential A/B tests.

Simple A/B tests might tell you Headline A is a winner and Image B is a winner. But what if Headline A combined with Image B actually tanks your conversion rate? MVT is the only way to see that. It stops you from making optimization decisions in a vacuum.
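Here is a rough sketch of what an interaction effect looks like in numbers. The conversion rates are invented for illustration, and the element names are hypothetical. Each new element wins on its own, yet the pair underperforms the original page.

```python
# Hypothetical conversion rates for a 2x2 test (headline x image).
# These figures are made up to illustrate the idea, not measured data.
rates = {
    ("old_headline", "old_image"): 0.030,  # original page
    ("new_headline", "old_image"): 0.036,  # new headline alone wins
    ("old_headline", "new_image"): 0.035,  # new image alone wins
    ("new_headline", "new_image"): 0.028,  # together they lose
}

baseline = rates[("old_headline", "old_image")]
headline_lift = rates[("new_headline", "old_image")] - baseline
image_lift = rates[("old_headline", "new_image")] - baseline

# What you would expect if the two effects simply added up.
expected_combined = baseline + headline_lift + image_lift
actual_combined = rates[("new_headline", "new_image")]

# A non-zero gap between actual and expected is the interaction effect.
interaction = actual_combined - expected_combined

print(f"Expected if additive: {expected_combined:.3f}")
print(f"Actual combined:      {actual_combined:.3f}")
print(f"Interaction effect:   {interaction:+.3f}")
```

Two sequential A/B tests would only ever measure the two single-change rows, so the negative interaction in the last row stays invisible.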

This is exactly how giants like Netflix perfect their user experience. They don’t just A/B test one movie poster against another. They run multivariate tests on background images, title fonts, and character showcases to find the one combination that compels you to click ‘Play’.

Strategic use cases for MVT

MVT is a precision tool, not a blunt instrument. You don’t use it to test wild, unproven ideas; you use it to refine a concept that’s already working. Here’s when it actually makes sense to deploy it:

- Optimizing high-traffic landing pages: Got a landing page that already converts well and sees hundreds of thousands of visitors? MVT is perfect for squeezing out every last drop of performance. You can test combinations of headlines, form fields, social proof, and button copy. A great toolset makes this smoother; for instance, a robust landing page creator can help you quickly generate the variants you need.

- Refining key funnel steps: Your checkout flow or user onboarding sequence is mission-critical. Once you have a solid flow, use MVT to test different labels, progress indicators, and help text combinations to reduce friction and cut drop-off rates.

- Fine-tuning ad creatives: For paid search or social campaigns, MVT can uncover the most potent mix of ad copy, imagery, and calls-to-action to maximize your ROAS.

The real power of MVT isn’t just finding a single winner. It's understanding the contribution of each individual element to your conversion goal. It tells you why something works, which is an insight you can apply across your entire business.

The elephant in the room: the traffic requirement

Now for the hard truth. The biggest drawback of multivariate testing is the absolutely insane traffic requirement. Because you’re splitting your audience across many combinations (often 8, 12, or even more), each variation gets only a tiny slice of your total traffic.

If your page gets 10,000 visitors a month and you run a test with 8 combinations, each version only gets 1,250 visitors. That’s almost certainly not enough to reach statistical significance. You’ll be staring at inconclusive data for months, which is a death sentence for a fast-moving company.

As a general rule, don't even think about MVT unless you have hundreds of thousands of monthly visitors to the specific page you want to test. Otherwise, stick with the sniper rifle of A/B testing. It’s smarter, faster, and will give you actionable data you can actually trust.

The hard truth about traffic and statistical significance

This is where most founders get it completely wrong, so listen up. Running any kind of test without enough traffic is like trying to predict a national election by asking three people in a coffee shop. The result isn't just wrong; it’s dangerously misleading.

Let’s talk numbers. Imagine your landing page gets 10,000 visitors a month. In a simple A/B test, each of your two versions gets a respectable 5,000 visitors. That’s often enough to get a clear, trustworthy signal in a reasonable amount of time. You can make a decision and move on.

Now, let's try a multivariate test on that same page. You want to test two headlines, two images, and two CTAs. That’s 2x2x2 = 8 combinations. All of a sudden, your 10,000 visitors are sliced so thin that each variation only gets 1,250 visitors. The statistical noise will completely drown out any real effect. You'll be staring at a spreadsheet of meaningless data, wondering why nothing won.
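The slicing above is simple division, but it helps to see it side by side. A minimal sketch, assuming traffic is split evenly across variations (the function name is my own):

```python
from math import prod

def visitors_per_variation(monthly_visitors: int, variants_per_element: list[int]) -> float:
    """Split traffic evenly across every combination of element variants."""
    combinations = prod(variants_per_element)
    return monthly_visitors / combinations

ab_test = visitors_per_variation(10_000, [2])    # one change, two versions
mvt = visitors_per_variation(10_000, [2, 2, 2])  # headlines x images x CTAs

print(ab_test)  # 5000.0
print(mvt)      # 1250.0
```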

The reality of reaching statistical significance

Let’s be brutally honest: achieving statistical significance, typically at a 95% confidence level, is non-negotiable. Anything less, and you're just guessing. This is where the traffic requirements for MVT become a massive barrier for most businesses.

Real-world stats show A/B testing often needs 50-80% less traffic to get a significant result. Consider a page with 10,000 weekly visitors: a simple A/B test can often reach 95% confidence in one to two weeks. An MVT with just 8 combinations would need 6-8 weeks to reach the same confidence—and that's if it can reach it at all.

Most experts agree you need at least 300-500 conversions per variation to get reliable data, a number that's simply out of reach for most MVT setups unless you have massive scale. You can find more detail on these traffic requirements on metadata.io.
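If you want a number for your own page instead of a rule of thumb, the standard sample-size approximation for comparing two conversion rates is a reasonable starting point. This is a sketch, not a replacement for your testing tool's calculator. The 3% baseline, the 20% relative lift, and the 80% power default are assumptions I picked for illustration.

```python
from math import ceil, sqrt
from statistics import NormalDist

def sample_size_per_variation(
    baseline_rate: float,
    relative_lift: float,
    alpha: float = 0.05,   # 95% confidence level, two-sided
    power: float = 0.80,   # assumed target power
) -> int:
    """Approximate visitors needed per variation for a two-proportion test."""
    p1 = baseline_rate
    p2 = baseline_rate * (1 + relative_lift)
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)
    z_beta = NormalDist().inv_cdf(power)
    p_bar = (p1 + p2) / 2
    numerator = (
        z_alpha * sqrt(2 * p_bar * (1 - p_bar))
        + z_beta * sqrt(p1 * (1 - p1) + p2 * (1 - p2))
    ) ** 2
    return ceil(numerator / (p2 - p1) ** 2)

# Hypothetical page: 3% baseline conversion, hoping to detect a 20% relative lift.
n = sample_size_per_variation(baseline_rate=0.03, relative_lift=0.20)
print(f"Visitors needed per variation: {n:,}")
print(f"Total for an A/B test (2 variations): {2 * n:,}")
print(f"Total for an 8-combination MVT:       {8 * n:,}")
```

The per-variation requirement stays the same whether you run 2 variations or 8, so the total traffic you need scales with the number of combinations. Smaller expected lifts push the requirement up fast.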

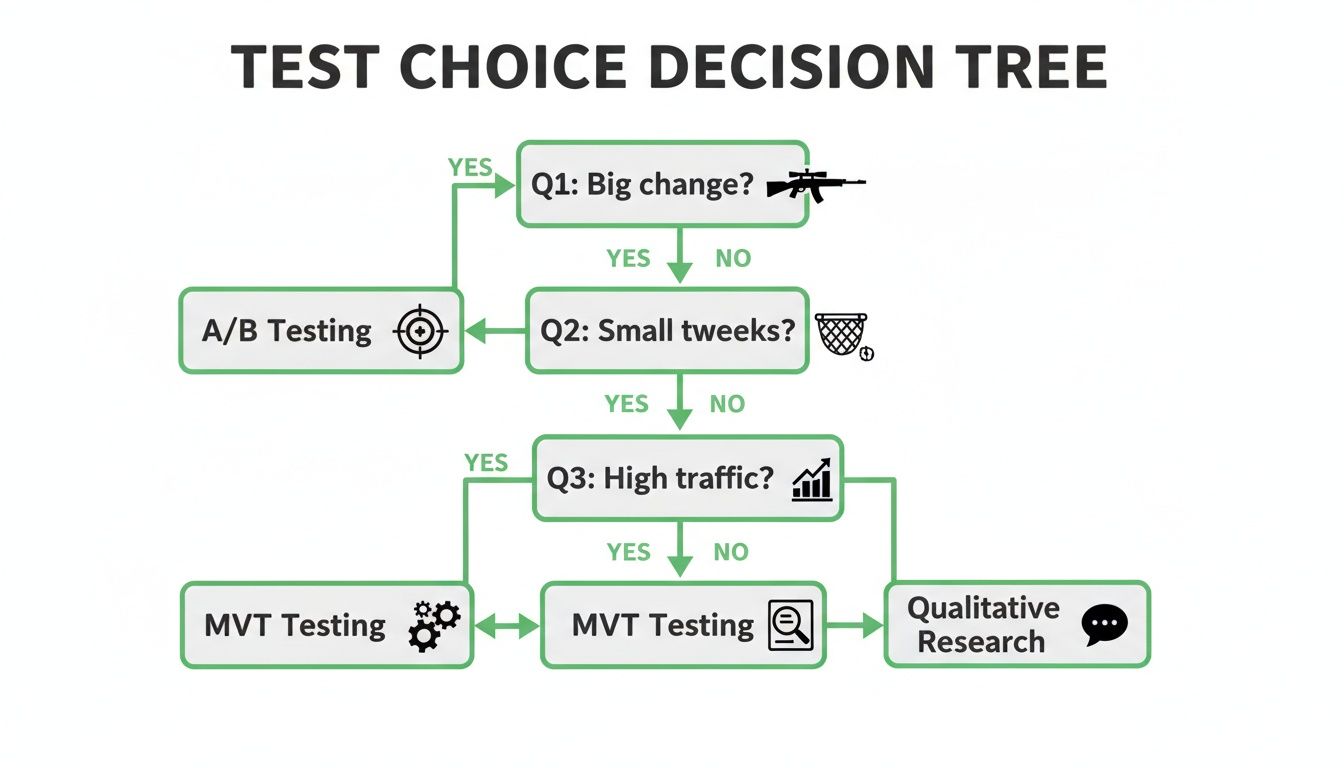

This decision tree gives a quick visual on when to choose a sniper rifle (A/B) versus a wide net (MVT).

The key takeaway is clear: your decision hinges on the scale of the change you're testing and the volume of traffic you can command.

Don't fall for false negatives

Then there's the concept of statistical power—the probability your test will actually detect a real effect if one exists. When traffic is too low, your test has low power.
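To put a rough number on power, here is a sketch using the normal approximation for a two-sided test on two conversion rates. The 3% and 3.6% rates are assumptions for illustration, and the calculation ignores the negligible chance of a significant result in the wrong direction.

```python
from math import sqrt
from statistics import NormalDist

def approximate_power(p1: float, p2: float, n_per_variation: int, alpha: float = 0.05) -> float:
    """Approximate chance of detecting a true difference between p1 and p2."""
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)
    standard_error = sqrt(
        p1 * (1 - p1) / n_per_variation + p2 * (1 - p2) / n_per_variation
    )
    z_effect = abs(p2 - p1) / standard_error
    return NormalDist().cdf(z_effect - z_alpha)

# Hypothetical true rates: 3.0% control vs 3.6% challenger.
for visitors in (1_250, 5_000, 20_000):
    power = approximate_power(0.03, 0.036, visitors)
    print(f"{visitors:>6} visitors per variation -> power of about {power:.0%}")
```

The thinner you slice your traffic, the lower this number goes, and the more likely a real winner gets written off as a dud.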

This leads to a silent killer of innovation: the false negative. This is where you have a brilliant, winning idea, but your underpowered test fails to detect its positive impact. So, you kill the idea and stick with the old version, convinced your new approach was a failure.

I’ve seen teams kill potentially game-changing features because their MVT was inconclusive. The test didn’t fail; their setup did. They didn't have the traffic, and they mistook statistical noise for a lack of user interest. It's a painful and expensive mistake.

Wasting engineering and design time on a test that was doomed from the start is just burning money. Every hour spent building and monitoring an inconclusive test is an hour not spent on something that could actually move the needle. You have to be ruthless with your resources.

Part of that is setting up your analytics correctly from day one. If you're struggling with event tracking, you might be interested in our guide on how to use Google Tag Manager to get the clean data you need.

Bottom line: respect the math. If you don't have the traffic, don't run the complex test. It's that simple. Choose the right tool for your scale, or you're just gambling with your company's future.

Common testing pitfalls that waste your time

Alright, I’ve seen it all. Smart, ambitious founders making really dumb mistakes because they were impatient or read some misleading blog post from 2012. Let’s make sure that's not you.

Most testing failures don’t come from a bad hypothesis; they come from a lack of discipline. You can have the best testing idea in the world, but if you screw up the execution, you’re just wasting expensive time.

Here are the most common ways I see people sabotage their own experiments. Don't be that person.

The impatience trap: calling a test too early

This is the number one rookie mistake. You launch a test, and after two days, version B is crushing it with a 20% lift. You get excited, call the test, and roll out the new version to everyone. A week later, you check your overall conversion rate, and it’s flat.

What happened? You fell for a statistical illusion. Early results are often driven by randomness or a small segment of early adopters.

Calling a test before it reaches statistical significance at a 95% confidence level is pure gambling, not data-driven decision-making. You have to let the numbers mature.

It's a hard rule with no exceptions: if a test hasn't reached statistical significance, the result is meaningless. You haven't learned anything. Ending it early because it looks good is just confirmation bias in action. Be patient or don't test at all.
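Here is a minimal sketch of the check itself: a two-sided, two-proportion z-test. Most testing tools run something like this for you, though their exact method may differ. The visitor and conversion counts below are invented to show the same 20% lift early and late in a test.

```python
from math import sqrt
from statistics import NormalDist

def two_proportion_p_value(conv_a: int, n_a: int, conv_b: int, n_b: int) -> float:
    """Two-sided p-value for the difference between two conversion rates."""
    rate_a = conv_a / n_a
    rate_b = conv_b / n_b
    pooled = (conv_a + conv_b) / (n_a + n_b)
    standard_error = sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (rate_b - rate_a) / standard_error
    return 2 * (1 - NormalDist().cdf(abs(z)))

# Hypothetical day-two snapshot: B shows a 20% lift on tiny numbers.
early = two_proportion_p_value(conv_a=10, n_a=200, conv_b=12, n_b=200)

# Hypothetical later snapshot: same 20% lift, far more data.
later = two_proportion_p_value(conv_a=500, n_a=10_000, conv_b=600, n_b=10_000)

for label, p_value in (("early", early), ("later", later)):
    verdict = "significant" if p_value < 0.05 else "not significant"
    print(f"{label}: p = {p_value:.4f} ({verdict} at the 95% level)")
```

The lift is identical in both snapshots. Only the second one has enough data behind it to mean anything.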

This also means running the test for at least one full business cycle—usually a week. User behavior on a Tuesday morning is wildly different from a Saturday night. Ignoring these weekly cycles gives you a skewed picture of reality. Run your tests for at least 7 days, preferably 14, to smooth out these fluctuations.

Mistaking motion for progress: the local maximum problem

Here’s a trap that’s harder to spot. You get addicted to the dopamine hit of small wins. You run dozens of A/B tests on button colors, minor headline tweaks, and CTA copy. You celebrate every 1.5% lift, feeling productive.

The problem? You’re stuck optimizing a local maximum. You might be perfecting the most efficient way to climb a small hill while completely missing the massive mountain right next to it. While you're testing shades of blue, a competitor could be testing a radical new onboarding flow that delivers a 10x better user experience.

- Small tweaks (the hill): Testing “Sign Up Now” vs. “Get Started Free.” This is a fine optimization, but its potential impact is capped.

- Big swings (the mountain): Testing your current signup form against a completely redesigned, interactive onboarding experience. This is a riskier bet, but the potential payoff is exponentially higher.

Don’t let the comfort of tiny, predictable wins stop you from making the bold changes that truly build category-defining products.

Focusing on vanity metrics

This one is insidious. You run a test to optimize your homepage for newsletter sign-ups. Your new version is a massive success, boosting sign-ups by 50%! You pop the champagne. 🍾

Three months later, your head of product points out that user retention from that period has plummeted. It turns out the new, aggressive pop-up drove lots of low-intent sign-ups from people who churned almost immediately. You won the battle (more sign-ups) but started losing the war (long-term customer value).

Always measure downstream impact. Look beyond the primary conversion metric and track guardrail metrics like user retention rate, activation milestones, customer lifetime value (LTV), and revenue per user.
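A guardrail check can be as simple as comparing each downstream metric against the control and flagging anything that drops too far. This is a sketch under my own assumptions: the metric names, the numbers, and the 5% tolerance are all hypothetical.

```python
def guardrail_violations(
    control: dict[str, float],
    variant: dict[str, float],
    max_relative_drop: float = 0.05,  # assumed tolerance: 5% relative drop
) -> list[str]:
    """Return the guardrail metrics where the variant falls too far below control."""
    violations = []
    for metric, control_value in control.items():
        relative_change = (variant[metric] - control_value) / control_value
        if relative_change < -max_relative_drop:
            violations.append(metric)
    return violations

# Hypothetical numbers: sign-ups went up, but did the business get healthier?
control = {"retention_30d": 0.40, "revenue_per_user": 12.0}
variant = {"retention_30d": 0.30, "revenue_per_user": 11.9}

print(guardrail_violations(control, variant))  # ['retention_30d']
```

In practice you would also want a significance check on each guardrail, since small drops can be noise just like small lifts.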

A win that tanks your core business health is a catastrophic failure. True optimization isn’t about tricking users into clicking a button; it's about creating genuine value that keeps them coming back. Don’t fall for the easy win that costs you in the long run.

My framework for choosing the right test

Alright, enough theory. How do you actually decide which test to run when you’re in the trenches? Over the years, I've boiled it down to a simple framework that cuts through the noise. It has to be simple—we’re all moving too fast for a complicated flowchart.

It all starts with one honest question: What is the single most important metric you need to move right now?

Forget vanity metrics. Are you bleeding users and need to fix retention? Is your activation rate a total disaster? Or are you leaving money on the table and need to improve monetization? Your answer sets the entire scope of your test. Be ruthless about which fire is burning the hottest.

Start with your stage and your goal

For early-stage products or brand-new features, the answer is almost always a big, bold A/B test. You don’t know what works yet. Your job is to test wildly different ideas about your core value proposition, not waste time fiddling with button colors when your entire headline might be wrong.

Here’s how I break it down by goal:

- Activation: This is the user’s “aha!” moment. You should be testing radical onboarding flows or completely different value propositions on your homepage. The goal is a big swing, and A/B testing is the right bat for the job.

- Retention: Keeping users from churning. Test different feature introductions or completely new communication cadences. Again, A/B testing gives you a clear yes/no signal on these major changes.

- Monetization: Getting paid. Test different pricing models or fundamentally opposing checkout flows. These are foundational business questions that demand a simple, clean A/B comparison.

Only after you've found a winning direction with A/B testing should you even think about MVT. Multivariate testing is for fine-tuning a machine that's already running well, not for building the engine in the first place. This is especially true when you're weighing multivariate vs. A/B testing for paid campaigns, where every click has a cost.

The goal is to move from random acts of testing to a systematic engine for growth. This is about making smart, strategic bets and continuously iterating toward a better product—not just changing things for the sake of it.

Build a simple testing roadmap

A true testing culture doesn't just happen; you have to build it with intention. That means creating a dead-simple roadmap and getting your team bought in.

First, every test needs a strong hypothesis. Don't just say, "Let's test the headline." A real hypothesis has structure: "If I change the headline to be benefit-focused, then I expect sign-ups to increase by 15%, because users will better understand the immediate value." That classic "If X, then Y, because Z" format forces you to be clear about what you're doing and why.

Next, build a backlog of these hypotheses. Prioritize them based on potential impact versus the resources required. This simple process turns a chaotic list of "what ifs" into an organized growth strategy. It shows your team that testing isn't just about finding winners; it's about learning in a structured way.
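The hypothesis format and the backlog can live in a spreadsheet, but here is one way to sketch them in code. The 1-10 scoring scale and the impact-divided-by-effort ranking are my own simplifying assumptions, and the backlog entries are invented examples.

```python
from dataclasses import dataclass

@dataclass
class Hypothesis:
    change: str           # "If X"
    expected_result: str  # "then Y"
    rationale: str        # "because Z"
    impact: int           # estimated impact, 1 (low) to 10 (high)
    effort: int           # resources required, 1 (low) to 10 (high)

    @property
    def priority(self) -> float:
        """Simple score: more impact per unit of effort ranks higher."""
        return self.impact / self.effort

# Hypothetical backlog entries.
backlog = [
    Hypothesis("change the CTA button color", "clicks rise slightly",
               "the button stands out more", impact=2, effort=1),
    Hypothesis("make the headline benefit-focused", "sign-ups increase by 15%",
               "users understand the immediate value", impact=8, effort=2),
    Hypothesis("rebuild onboarding as an interactive flow", "activation doubles",
               "users reach the aha moment faster", impact=10, effort=8),
]

for h in sorted(backlog, key=lambda h: h.priority, reverse=True):
    print(f"{h.priority:.2f}  If I {h.change}, then {h.expected_result}, because {h.rationale}.")
```

A pure ratio like this favors cheap tests, so treat the ranking as a conversation starter and make sure the big swings still get a slot.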

If you want to dive deeper into how this applies to paid ads, we have a guide on effective PPC advertising strategies that can give you more ideas.

This isn’t about adding bureaucracy. It’s about creating focus. It’s how you make sure every single experiment—win or lose—moves your business forward.

Frequently asked questions

Alright, let's tackle the questions I hear all the time from other founders and PPC managers. No fluff, just direct answers to help you stop wondering and start testing. Analysis paralysis is a real business killer, so let’s clear these up.

How long should I run an A/B test?

Honestly, the answer "it depends" is annoying but true. So let's reframe it. The goal is to run a test long enough to capture a full business cycle and hit statistical significance. Anything less is just guessing.

- Minimum time: Give it at least one full week, but preferably two. User behavior on a Monday morning is wildly different from a Saturday night. You need to capture that full spectrum to avoid making decisions on skewed data.

- Statistical significance: Don't even think about stopping the test until you hit statistical significance at a 95% confidence level or higher. Ending it early because one version is "winning" after two days is a classic rookie mistake that leads to terrible long-term decisions.

- Conversions per variation: As a rough guideline, aim for a minimum of 300-500 conversions per variation. If you're nowhere near that after a few weeks, your traffic is probably too low for that specific test, and you might need to test bigger, more dramatic changes.
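You can turn those three rules into a back-of-the-envelope duration estimate. This sketch assumes an even traffic split and a steady conversion rate. The default target of 400 conversions is simply the middle of the 300-500 range, and the traffic figures are hypothetical.

```python
from math import ceil

def estimated_test_days(
    daily_visitors: int,
    baseline_rate: float,
    variations: int,
    target_conversions: int = 400,  # assumed midpoint of the 300-500 guideline
    min_days: int = 14,             # two full weekly cycles
) -> int:
    """Days needed to collect the target conversions in every variation."""
    daily_conversions_per_variation = daily_visitors / variations * baseline_rate
    days_for_conversions = ceil(target_conversions / daily_conversions_per_variation)
    return max(min_days, days_for_conversions)

# Hypothetical page: 1,000 visitors a day converting at 3%.
print(estimated_test_days(1_000, 0.03, variations=2))  # 27
print(estimated_test_days(1_000, 0.03, variations=8))  # 107
```

If the estimate comes back in months rather than weeks, that is your cue to test a bigger, bolder change or cut the number of variations.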

What are the best testing tools for startups?

Forget the massive, enterprise-level suites that cost a fortune and take a quarter to implement. As a startup, you need something fast, affordable, and easy to get going.

For years, the default free starting point was Google Optimize, but Google shut it down in September 2023. Google Analytics 4 now relies on integrations with third-party testing tools instead, so check which ones plug directly into your analytics data before you commit.

If you have a bit more budget and need more advanced features, tools like VWO or Optimizely are solid choices. The key is to pick one and actually use it. A simple tool you use is infinitely better than an expensive one you don't.

Can I run multiple tests at the same time?

Yes, but you have to be smart about it. You can run multiple tests on different pages at the same time without much trouble. Testing your homepage headline and your pricing page layout simultaneously is generally fine: as long as visitors are randomly assigned in each test independently, the variants of one test are spread evenly across the variants of the other and don't skew the comparison.

Where you get into trouble is running two different tests on the same page at the same time.

If you're A/B testing a headline and also running a multivariate test on the images and CTAs on that exact same page, your data will be a complete mess. You'll have no idea which change actually caused which effect. It's a recipe for confusion and bad data.
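One common way to avoid this collision is to make the experiments on a page mutually exclusive, so each visitor only ever sees one of them. Below is a minimal sketch using deterministic hashing. The function, page, and experiment names are hypothetical, and this is an illustration of the idea rather than how any particular testing tool works.

```python
import hashlib

def assign_experiment(user_id: str, page: str, experiments: list[str]) -> str:
    """Deterministically place a user in exactly one experiment for a page."""
    digest = hashlib.sha256(f"{page}:{user_id}".encode("utf-8")).hexdigest()
    bucket = int(digest, 16) % len(experiments)
    return experiments[bucket]

# Hypothetical experiments competing for the same landing page.
experiments = ["headline_ab_test", "image_cta_mvt"]

for user_id in ("user-1", "user-2", "user-3", "user-4"):
    print(user_id, "->", assign_experiment(user_id, "landing-page", experiments))
```

The catch is that each experiment now gets only a share of the page's traffic, so both take longer to finish. On a low-traffic page, running them one after the other is usually the saner choice.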
