A No-Nonsense Guide to Split Testing Landing Pages

Here's the deal: sending paid traffic to a landing page you haven't tested is the same as lighting your marketing budget on fire. I'm not being dramatic. Split testing landing pages is just the simple, systematic process of comparing different versions of a page to find out which one actually makes you money.

It’s the critical difference between guessing what works and knowing what gets people to convert.

Stop burning cash on untested landing pages

I see this constantly with founders and marketing teams across Europe. They'll spend weeks, sometimes months, perfecting their ad copy, keyword strategy, and audience targeting for a big new PPC campaign. They obsess over every tiny detail of the ad itself, and then... they link it to a landing page that was designed based on a gut feeling and a prayer.

It's madness. And honestly, it's a bit dumb.

This isn’t some minor oversight. It’s the single biggest reason most paid campaigns bleed cash and fail to deliver a positive return. The ad isn't the finish line—it's just the starting pistol. The landing page is where the race is actually won or lost.

The massive cost of "good enough"

Picture this: you launch a landing page for your new Google Ads campaign. You’re pouring your budget into high-intent keywords, but conversions are just sitting there, hovering around the industry average of 6.6%. That's the sad reality for most unoptimized pages.

But here’s the kicker: I’ve seen optimized variants routinely hit 12-15% or even higher. That’s not a small tweak; it’s a complete game-changer for your unit economics. This isn't just theory—it’s the hard-won lesson from my own ventures. The moment we shifted from creative guesswork to a systematic process of split testing, it directly changed our bottom line.

The ultimate goal of split testing is to improve your landing page performance and learn how to increase website conversions effectively. It's about building a data-driven culture that values evidence over ego.

Why data-driven testing is non-negotiable

A culture of continuous testing is the only sustainable way to scale a business in 2024. It yanks you out of subjective debates in a meeting room and forces you to look at objective data that speaks for itself. This isn't just about tweaking button colors; it's about digging into user psychology.

- Financial Imperative: Every single failed conversion on an untested page is wasted ad spend. Doubling your conversion rate means you can either slash your customer acquisition cost in half or double your lead volume for the exact same budget. The math is simple and brutal.

- Algorithmic Advantage: Google Ads factors landing page experience into Quality Score, so relevant, useful pages that convert tend to earn better scores. That can cut your cost-per-click (CPC) and lift your Return on Ad Spend (ROAS).

- Compounding Knowledge: Every test, whether it wins or loses, teaches you something valuable about your customer. These insights are assets that inform your future marketing, product development, and overall strategy.
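To make the "simple and brutal" math from the first bullet concrete, here's a minimal sketch. The budget and click numbers are made up for illustration; only the 6.6% baseline comes from this guide.

```python
def cost_per_acquisition(ad_spend: float, clicks: int, conversion_rate: float) -> float:
    """Ad spend divided by the number of conversions it produced."""
    conversions = clicks * conversion_rate
    return ad_spend / conversions


# Hypothetical campaign: 5,000 in spend buys 2,500 clicks.
AD_SPEND = 5000.0
CLICKS = 2500

baseline_cpa = cost_per_acquisition(AD_SPEND, CLICKS, 0.066)  # untested page
doubled_cpa = cost_per_acquisition(AD_SPEND, CLICKS, 0.132)   # conversion rate doubled

print(f"Baseline cost per acquisition: {baseline_cpa:.2f}")
print(f"After doubling conversion rate: {doubled_cpa:.2f}")
```

Same spend, same clicks, half the cost per customer. Or keep the cost per customer where it was and take double the leads.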

This guide isn’t some fuzzy marketing theory. It’s a practical playbook for building a rigorous process for landing page conversion rate optimization so you can stop leaving money on the table.

How to build a killer test hypothesis

Let’s get one thing straight: a test without a solid hypothesis is just a guess. It’s a complete waste of your time, your team’s energy, and your ad budget.

If you're just throwing spaghetti at the wall to see what sticks, you're not optimizing—you're just making noise. This is where amateurs get stuck.

Running split testing for landing pages without a clear 'why' is the fastest way to get inconclusive results and a whole lot of frustration. A strong hypothesis isn’t a formality; it’s the strategic backbone of your test. It forces you to think critically about why a change might actually work, turning random tactics into a systematic process for learning what your customers care about.

This is the thinking that separates pros who deliver consistent wins from those who get lucky once in a while.

Moving beyond button colors

Please, can we stop obsessing over button colors? 😅

Unless your current call-to-action is practically invisible, changing it from blue to green is not going to be the breakthrough you're looking for. These are low-impact tweaks that almost never move the needle in a meaningful way.

A great hypothesis is never about the element itself; it’s about the user psychology behind it. The goal isn't just to find a winner, but to understand why it won. That’s how you build a real competitive advantage.

True optimization comes from focusing on the big levers—the core drivers of conversion that can produce a real lift. Think bigger.

Here's a quick reference to help you prioritize. Focus your energy on the left column first.

High-impact vs. low-impact testing elements

| High-Impact Elements (Big Wins) | Low-Impact Elements (Minor Tweaks) |
| --- | --- |
| Headline & Value Proposition: The first 5 seconds on the page. | Button Color: Unless it's a usability nightmare. |
| Hero Section Layout: Image, form, and headline combination. | Minor Font Changes: Small tweaks to size or style. |
| Offer & Call-to-Action: What you're asking for and how you ask. | Iconography: Subtle changes to visual elements. |
| Social Proof: Testimonials, logos, case studies (and their placement). | Word-smithing Body Copy: Changing a few words here and there. |
| Form Length & Fields: Reducing friction is a huge win. | Footer Links: Important for trust, but rarely a conversion driver. |
| Page Layout & Flow: The overall structure and narrative of the page. | Image Variations: Unless the original is completely irrelevant. |

Instead of asking, 'What if we change the button to red?' a much better question is, 'What if we clarify our headline to explicitly state the main benefit, because we believe users aren't grasping our value proposition quickly enough?'

See the difference? One is a random shot in the dark; the other is a strategic inquiry rooted in a problem.

The hypothesis framework that forces clarity

To build a powerful hypothesis, you need a structure that forces you to justify why you're even running the test. I use a simple but incredibly effective framework: If I change [X], then [Y] will happen, because of [Z].

A weak hypothesis sounds like this: 'Let's test a new headline.'

A killer hypothesis sounds like this: 'If I change the headline to focus on time saved instead of features, then lead form submissions will increase, because our user feedback shows that busy professionals are our core audience and their biggest pain point is lack of time.'

This structure forces you to connect a specific action to a measurable result and, most importantly, a psychological reason. It gives you a clear lens for analysis after the test is over. You're not just looking for a winner; you're trying to validate (or invalidate) an assumption you have about your customers.

For those looking to go deeper on different testing methods, our guide on multivariate testing versus A/B testing is a great next step. But no matter the method, this framework is your best defense against wasting traffic on pointless tests.

Designing and launching your split test

Alright, theory is great, but execution is everything. You've got a killer hypothesis, and now it's time to actually get the test live without messing it all up. This is where the rubber meets the road—the operational side of split testing landing pages that separates a clean, trustworthy result from a messy, inconclusive waste of traffic.

Let’s be direct: this is the part where small mistakes can completely invalidate your results. So, pay attention. The goal here is to set up a test that is clean, technically sound, and gives you data you can actually trust to make business decisions.

A/B vs. multivariate: the practical choice

First things first, you'll hear people talk about A/B testing and multivariate testing (MVT).

A/B testing is simple: you test one version of a page (your control, A) against another version (your variant, B). MVT is where you test multiple changes at once—say, three different headlines and two different images in every possible combination.

For 99% of businesses, especially when you're just getting started, MVT is a terrible idea. It requires a massive amount of traffic to test all the combinations and reach statistical significance.

Stick with A/B testing. It's faster, cleaner, and gives you clearer insights. Win a test, make the winner the new control, and move on to the next test. Simple.
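If you want to see why MVT eats traffic, here's a rough sketch. It assumes every combination needs its own pile of conversions (100 each, the low end of the minimum this guide recommends) and that the page converts at the 6.6% baseline. The function names are mine, not from any testing tool.

```python
from math import ceil, prod


def mvt_combinations(options_per_element: list[int]) -> int:
    """Number of page versions a full multivariate test has to serve."""
    return prod(options_per_element)


def visitors_needed(versions: int, conversions_per_version: int, conversion_rate: float) -> int:
    """Total visitors required so every version collects enough conversions."""
    return ceil(versions * conversions_per_version / conversion_rate)


# Three headlines x two images, as in the example above.
mvt_versions = mvt_combinations([3, 2])
ab_versions = 2

mvt_traffic = visitors_needed(mvt_versions, 100, 0.066)
ab_traffic = visitors_needed(ab_versions, 100, 0.066)

print(f"MVT: {mvt_versions} versions, about {mvt_traffic} visitors")
print(f"A/B: {ab_versions} versions, about {ab_traffic} visitors")
```

Add a third element with three options and you're at 18 versions. The traffic bill grows by multiplication, not addition.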

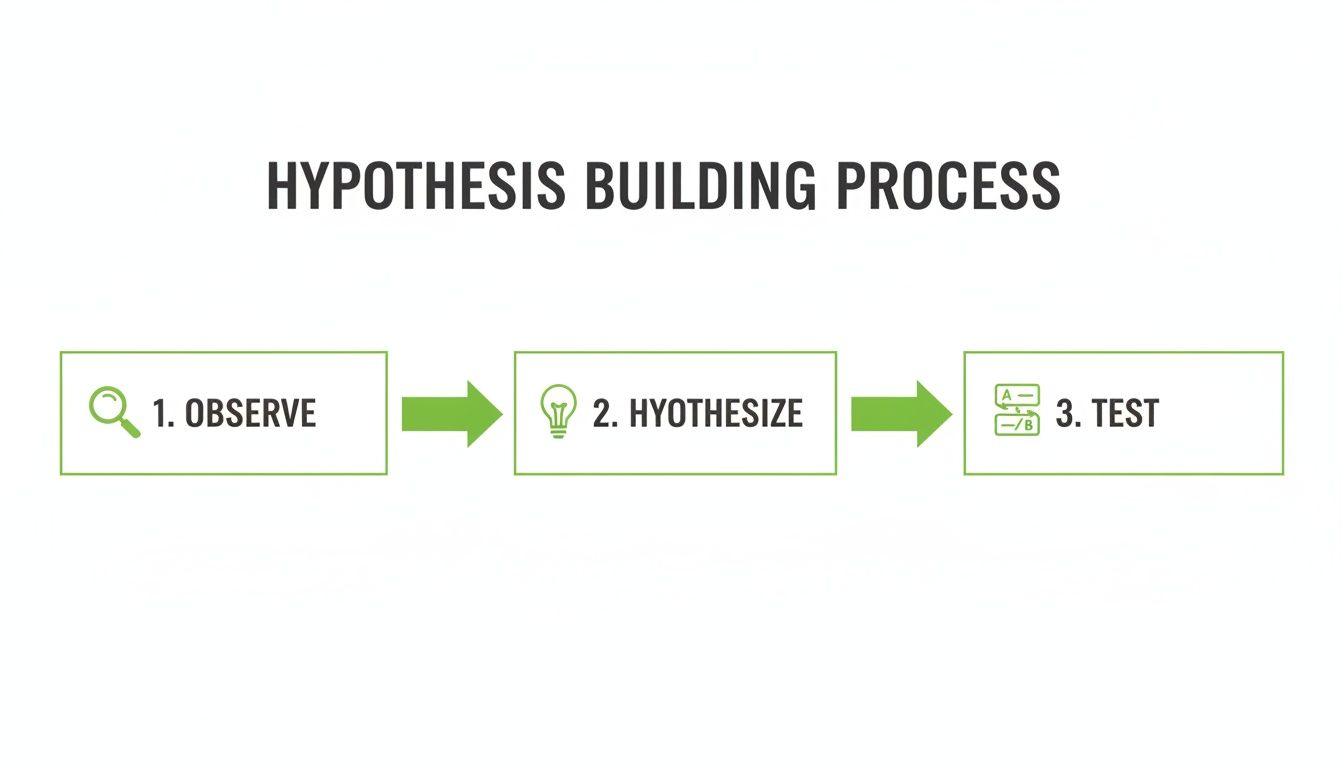

This process of observing user behavior, forming a testable hypothesis, and then executing the test is the fundamental loop of optimization.

This simple flow keeps your efforts focused and ensures that every test you run is grounded in a strategic reason, not just random guessing.

Determining sample size and test duration

One of the most common and damaging mistakes I see is ending a test too early. You run it for a weekend, see one version pulling ahead by a few conversions, and declare a winner.

This is a recipe for disaster. You need to let a test run long enough to achieve two things: sufficient sample size and full business cycles. User behavior on a Monday morning is different from a Saturday night. You must run your test long enough to capture these natural fluctuations. I recommend running a test for at least two full business weeks to smooth out any daily anomalies. You also need enough conversions on each variant to know the result isn't just random luck. A dozen conversions mean nothing. Aim for at least 100-200 conversions per variant as a bare minimum.

Don't be impatient. Let the data mature. Making a major business decision based on a statistically insignificant result is worse than not testing at all.
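For a rough sense of how much traffic "sufficient sample size" means, here's a sketch using the standard normal-approximation formula for comparing two conversion rates. The 80% power setting and the example lift are my assumptions, not numbers from this guide, and a proper calculator in your testing tool should have the final word.

```python
from math import ceil
from statistics import NormalDist


def visitors_per_variant(
    baseline_rate: float,
    expected_rate: float,
    confidence: float = 0.95,
    power: float = 0.80,  # assumption: a common default, not from this guide
) -> int:
    """Approximate visitors each variant needs to detect the given lift."""
    z_alpha = NormalDist().inv_cdf(1 - (1 - confidence) / 2)  # two-sided test
    z_beta = NormalDist().inv_cdf(power)
    variance = baseline_rate * (1 - baseline_rate) + expected_rate * (1 - expected_rate)
    effect = expected_rate - baseline_rate
    return ceil((z_alpha + z_beta) ** 2 * variance / effect ** 2)


# Hypothetical goal: lift a 6.6% page to 8%.
needed = visitors_per_variant(0.066, 0.08)
print(f"Visitors needed per variant: {needed}")
```

Notice how fast the number climbs when the expected lift shrinks. That's exactly why small tweaks take forever to validate.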

The technical setup: tracking goals correctly

This is where things can get messy if you're not careful. You need to ensure your testing tool is properly integrated with your analytics and ad platforms to track the right goals. For most of us in the lead gen space, this means connecting with Google Ads, Google Tag Manager (GTM), and a CRM like HubSpot.

The goal is to cleanly split your traffic and accurately attribute conversions. Your testing tool will typically handle the traffic split, randomly assigning visitors to either the control or the variant page. The tricky part is the tracking.

Using Google Tag Manager is the cleanest way to manage this. You can fire a single conversion event tag when a user completes the desired action (like a form submission), and your testing tool, Google Analytics, and Google Ads can all listen for that same event. This prevents data discrepancies and keeps your setup organized.
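Your testing tool handles the traffic split for you, but it helps to know roughly what's going on under the hood. One common approach (an illustration, not how any specific tool is implemented) is to hash a visitor ID so the same person always lands on the same version. The `visitor_id` and experiment name here are hypothetical.

```python
import hashlib


def assign_variant(
    visitor_id: str,
    experiment: str,
    variants: tuple[str, ...] = ("control", "variant"),
) -> str:
    """Deterministically map a visitor to one variant of an experiment."""
    # Including the experiment name keeps assignments independent across tests.
    digest = hashlib.sha256(f"{experiment}:{visitor_id}".encode("utf-8")).hexdigest()
    bucket = int(digest, 16) % len(variants)
    return variants[bucket]


# The same visitor gets the same page on every visit.
first_visit = assign_variant("visitor-123", "headline-test")
second_visit = assign_variant("visitor-123", "headline-test")
print(first_visit, second_visit)
```

Sticky assignment matters: if a returning visitor bounces between control and variant, you can't attribute their conversion to either one.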

For SMB founders and agencies juggling Google Ads at scale, this translates to real dollars. Directing paid traffic to A/B-validated pages improves ROI because more of the clicks you pay for actually convert, in some cases 2-3x better than the control they replaced. With 95% statistical confidence as the gold standard (if the two pages truly performed the same, a gap this large would show up by chance only about 5% of the time), PPC pros can scale winners with confidence, cut wasted spend, and feed cleaner conversion data back into Google Ads.

Avoiding common technical pitfalls

Finally, a few technical gremlins can ruin your test. Be on the lookout for these.

One of the most annoying is the 'flicker effect', or Flash of Original Content (FOOC). This happens when the original page loads for a split second before the testing software swaps in the variant content. It's a jarring user experience and can absolutely tank your conversion rates and pollute your data. Most modern testing tools offer anti-flicker snippets or synchronous loading options to mitigate this (scripts that load asynchronously are usually what cause the flicker in the first place), but always test it yourself.

Another critical factor is page speed. Your testing script adds a bit of overhead. If your variant page is loaded with heavy images or complex scripts, it could load slower than the control. This alone can cause it to lose, even if the content is better. Always run your test pages through a speed tool to ensure you're comparing apples to apples. If you need a refresher on the technicals, you can check out our guide on how to use Google Tag Manager.

Analyzing results and avoiding dumb mistakes

Alright, your test is live and data is trickling in. This is the moment of truth.

But let me be blunt: getting the data is the easy part. The hard part is interpreting it correctly without letting your biases and impatience screw everything up. This is where most people fail.

The single biggest mistake you can make? Ending a test too early. You see one variant pulling ahead by five conversions after two days and your gut screams to declare a winner. Stop. That’s not data; it’s noise. Patience here isn't just a virtue; it's your greatest asset for getting results you can actually trust.

Statistical significance is your only north star

The entire point of running a controlled test is to make sure your results aren't just a random fluke. That’s what statistical significance tells you. A 95% confidence level is the industry standard for a reason: if there were no real difference between your pages, you'd see a gap this large only about 5% of the time.

Think about it. If you call a test at 80% confidence, you're accepting roughly a one-in-five chance of crowning a 'winner' when there's no real difference at all. Would you bet a chunk of your ad budget on those odds? I certainly wouldn't. Don’t get emotional about it. Just wait for the numbers to mature.

The goal isn't just to find a winner. It's to find a true winner. Ending a test prematurely based on a small sample size is the marketing equivalent of building your house on sand. It will collapse, and you'll be left wondering why your 'winning' page is suddenly tanking.
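Here's a minimal sketch of the check behind that 95% threshold: a two-sided, two-proportion z-test. Your testing tool may use a different method (Bayesian or sequential, for example), and the visitor counts below are invented for illustration.

```python
from math import sqrt
from statistics import NormalDist


def two_proportion_p_value(conv_a: int, visitors_a: int, conv_b: int, visitors_b: int) -> float:
    """Two-sided p-value for the difference between two conversion rates."""
    rate_a = conv_a / visitors_a
    rate_b = conv_b / visitors_b
    pooled = (conv_a + conv_b) / (visitors_a + visitors_b)
    std_err = sqrt(pooled * (1 - pooled) * (1 / visitors_a + 1 / visitors_b))
    z = (rate_b - rate_a) / std_err
    return 2 * (1 - NormalDist().cdf(abs(z)))


# Two days in: the variant "leads" by five conversions.
p_early = two_proportion_p_value(10, 150, 15, 150)

# A mature test with hundreds of conversions per variant.
p_mature = two_proportion_p_value(200, 3000, 260, 3000)

for label, p in (("early", p_early), ("mature", p_mature)):
    verdict = "significant at 95%" if p < 0.05 else "not significant, keep running"
    print(f"{label}: p = {p:.3f} ({verdict})")
```

The early "lead" looks exciting on a dashboard and means nothing statistically. The mature test clears the bar.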

Look beyond the primary conversion rate

Winning a test isn't always as simple as seeing which variant got more form submissions. You have to look at the whole picture to understand the why behind the numbers. A great split test gives you insights far beyond a single metric. To get a complete picture, you need to analyze these secondary metrics:

- Bounce Rate: A higher conversion rate paired with a much higher bounce rate might signal a problem.

- Time on Page: A jump in time on page can indicate that your new messaging is resonating more deeply.

- Downstream Funnel Metrics: Most importantly, did the leads from Variant B actually turn into qualified opportunities or customers at a higher rate? It's all too easy to get more cheap leads that are lower quality.

Don't optimize for vanity metrics.

Segmentation is where the gold is hidden

Analyzing your test results in aggregate is just scratching the surface. The real, game-changing insights come from segmentation. How did your test perform for different slices of your audience? This is how you turn a simple test into a deep learning opportunity.

- Mobile vs. Desktop: Did your slick new layout crush it on mobile but actually perform worse on desktop? This tells you a ton about user context and device-specific behavior.

- New vs. Returning Visitors: Maybe your bold new value proposition works wonders on fresh traffic but alienates your existing audience who were used to the old messaging.

- Traffic Source: How did visitors from your Google Ads campaign behave compared to those from your LinkedIn ads? Different sources bring different levels of intent and awareness.

Segmentation transforms a simple 'A beat B' conclusion into a much richer story. You go from a flat statement to something like, 'A beat B, but only for mobile users from our brand campaign, telling us we need a different approach for desktop prospecting.' Now that’s a powerful insight.
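If your tool lets you export raw visit data, slicing it yourself takes a few lines. This is a sketch with a made-up visit log; the field names (`variant`, `device`, `converted`) are assumptions about what your export looks like.

```python
from collections import defaultdict


def conversion_by_segment(visits: list[dict], segment_key: str) -> dict:
    """Return {(segment, variant): conversion rate} for one segment dimension."""
    totals = defaultdict(lambda: [0, 0])  # [conversions, visitors]
    for visit in visits:
        key = (visit[segment_key], visit["variant"])
        totals[key][0] += int(visit["converted"])
        totals[key][1] += 1
    return {key: conversions / visitors for key, (conversions, visitors) in totals.items()}


def make_visits(variant: str, device: str, visitors: int, conversions: int) -> list[dict]:
    """Build fake visit rows for the example."""
    return [
        {"variant": variant, "device": device, "converted": i < conversions}
        for i in range(visitors)
    ]


# Invented data: B wins on mobile but loses on desktop.
visits = (
    make_visits("A", "mobile", 200, 10)
    + make_visits("B", "mobile", 200, 24)
    + make_visits("A", "desktop", 200, 18)
    + make_visits("B", "desktop", 200, 14)
)

rates = conversion_by_segment(visits, "device")
for (device, variant), rate in sorted(rates.items()):
    print(f"{device:8s} {variant}: {rate:.1%}")
```

One caution: every segment is a smaller sample, so run the same significance check on each slice before you act on it.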

If you want to dive deeper into the data that really matters, you should explore our guide on key metrics and reports for marketing analysis.

What to do when a test fails

Sometimes, your brilliant hypothesis falls flat. The test is inconclusive, or your variant straight-up loses to the original. Don't get discouraged. This isn't a failure; it's just more data.

Learning what doesn't work is just as valuable as learning what does, because it saves you from making a bad decision at scale. When a test is inconclusive, it usually means your change wasn't impactful enough to matter. It's time to go back to the drawing board and formulate a bolder hypothesis. The journey of optimization is a marathon, not a sprint.

Let's be honest. Everything we've covered so far—building a solid hypothesis, designing a clean test, and digging into the results—is powerful stuff. But it's also manual, slow, and eats up resources.

If you're running a growing business or an agency with hundreds (or thousands) of keywords, that manual process just falls apart. You can't possibly build and test a unique landing page for every single ad group. It's just not feasible.

This is where technology steps in and gives you an almost unfair advantage. The future of split testing landing pages isn't about running one perfect test a month. It's about running thousands of micro-tests every single day, automatically.

From manual A/B tests to AI-driven optimization

The traditional way of split testing is linear and clunky. You build page A, then you build page B. You run the test for weeks, analyze the data, implement the winner, and then you start the whole cycle over again. It’s a huge amount of effort just to get one insight.

Now, picture a totally different way of working.

What if you could automatically spin up a unique, high-intent landing page for every single keyword in your campaign? And what if that system could then constantly test tiny variations of the headline, copy, layout, and call-to-action in real time?

This is the core idea behind continuous optimization. It's a machine that never stops learning. The system intelligently sends a small slice of traffic to new variants. As soon as a winning version starts to pull ahead, it gets more traffic, while the losers are quietly phased out. The winner from today’s test becomes the control for tomorrow’s. This creates a relentless, compounding lift in performance that is simply impossible to achieve manually.
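To show the general idea of shifting traffic toward whatever is winning, here's a toy simulation using Thompson sampling, a well-known multi-armed bandit technique. I'm using it purely as an illustration; it's not a description of how any particular platform works internally, and the conversion rates are invented.

```python
import random


def choose_variant(stats: dict, rng: random.Random) -> str:
    """Pick the variant whose sampled conversion rate is highest (Thompson sampling)."""
    draws = {
        name: rng.betavariate(1 + conversions, 1 + visitors - conversions)
        for name, (conversions, visitors) in stats.items()
    }
    return max(draws, key=draws.get)


def simulate(true_rates: dict, visitors: int, seed: int = 0) -> dict:
    """Route simulated visitors one by one and record what each variant earned."""
    rng = random.Random(seed)
    stats = {name: [0, 0] for name in true_rates}  # [conversions, visitors]
    for _ in range(visitors):
        name = choose_variant(stats, rng)
        stats[name][1] += 1
        if rng.random() < true_rates[name]:
            stats[name][0] += 1
    return stats


# Hypothetical true conversion rates the system does not know up front.
results = simulate({"control": 0.05, "challenger": 0.15}, visitors=5000, seed=42)
for name, (conversions, seen) in results.items():
    print(f"{name}: {seen} visitors, {conversions} conversions")
```

Early on, both versions get a fair look. As evidence piles up, the weaker one gets starved of traffic without anyone having to call the test.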

This isn't some far-off sci-fi idea. This is exactly what AI-driven platforms like dynares are doing right now. It takes the fundamental principles of split testing and puts them on steroids, turning a manual chore into a self-improving system.

Connecting optimization directly to revenue

This is where it gets really powerful. The end goal isn't just to get more conversions; it's to drive more revenue. A truly smart system doesn't just stop at tracking a form submission. It needs to know the value of that conversion.

This is where the feedback loop becomes critical. By integrating with your CRM and feeding conversion value data back into Google Ads, the system learns which keywords and page variations aren't just generating leads, but are generating high-value customers. This transforms your entire PPC effort from a simple lead generation channel into a true revenue optimization engine. The system starts prioritizing traffic and optimizing pages for keywords that lead to actual sales, not just form fills.
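A quick sketch of why conversion value changes the picture. The numbers are invented, and in practice the deal values would come from your CRM rather than a hard-coded list.

```python
def revenue_per_visitor(visitors: int, closed_deal_values: list[float]) -> float:
    """Total closed revenue attributed to a page, divided by its traffic."""
    return sum(closed_deal_values) / visitors


# Hypothetical results: B generates more leads, A generates more money.
variant_a = {"visitors": 1000, "leads": 60, "closed_deal_values": [4000.0, 5500.0, 3000.0]}
variant_b = {"visitors": 1000, "leads": 95, "closed_deal_values": [2500.0, 2000.0]}

for name, data in (("A", variant_a), ("B", variant_b)):
    lead_rate = data["leads"] / data["visitors"]
    rpv = revenue_per_visitor(data["visitors"], data["closed_deal_values"])
    print(f"Variant {name}: lead rate {lead_rate:.1%}, revenue per visitor {rpv:.2f}")
```

Judged on form fills, B wins. Judged on revenue, A wins by a mile. Which one you optimize for depends entirely on what data you feed back.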

The new competitive edge

For agencies and businesses managing large-scale campaigns, this automated approach isn't a luxury anymore—it's fast becoming a necessity. The old method of pointing an entire campaign to one generic landing page is just dumb. It’s lazy, and it leaves a ton of money on the table.

The new standard is hyper-personalization at scale. Your landing page headline and copy perfectly mirror the ad and the keyword that brought the visitor there. Every click is a data point that makes the system smarter. Your team is freed from the endless cycle of building and testing pages to focus on higher-level strategy.

This isn't about replacing marketers. It's about giving them technology that handles the tedious, repetitive work, letting them focus on what humans do best: thinking strategically. The future is about building self-improving systems, and that’s a very exciting place to be.

Frequently asked questions

Look, I get it. When you're deep in the weeds of building and scaling, you just want straight answers. Here are the most common questions I get about split testing landing pages, with no fluff attached.

How long should I run a split test?

There's no magic number. Anyone who tells you to run every test for 'one week' is oversimplifying things. The real answer depends on two critical factors: getting enough data and covering a full business cycle.

First, you need a large enough sample size to trust the results—specifically, enough conversions on each variant. I aim for at least 100-200 conversions per version as an absolute minimum. More is always better.

Even more important, you have to let the test run long enough to account for the natural swings in user behavior. A test that only runs over a weekend is useless because you'll miss the completely different intent of weekday traffic. My rule of thumb? Run a test for at least two full business weeks. This smooths out daily fluctuations and gives you enough time to gather the data needed to hit 95% statistical confidence. Don't be impatient.

What if my split test shows no clear winner?

An inconclusive test isn't a failure; it’s a result. It’s valuable data that tells you something important: the change you made didn't have a meaningful impact on user behavior. This is a good thing! It stops you from rolling out a change based on a gut feeling and potentially making things worse.

Usually, a 'no winner' result happens because your change was too subtle or your hypothesis was simply wrong. The solution? Go back to the drawing board. Dig into the data to see if any specific segments tell a different story. Then, formulate a new, bolder hypothesis that tests a more fundamental element, like your core value proposition or the entire page layout.

Can I test more than two pages at once?

Yes, you absolutely can. This is often called an A/B/n test or, in more complex scenarios, a multivariate test. But—and this is a big but—you need to be extremely careful here. Every new variation you add slices your traffic into smaller and smaller pieces. If you have a lower-traffic page and you split that traffic four or five ways, your test will take an eternity to reach statistical significance. For most businesses, it’s a massive waste of time.

For most of us, a more effective strategy is to run a series of simple A/B tests. Test your control (A) against a challenger (B). If B wins, it becomes the new control. Then, you test the new control against your next challenger (C). This iterative, sequential approach is faster, cleaner, and delivers more actionable insights along the way. Stick to the basics until you have the massive traffic volumes to justify more complex experiments.
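Here's a back-of-the-envelope sketch of how extra variations stretch your timeline. It assumes an even traffic split, the 100-conversion minimum from earlier, and treats "two full business weeks" as a 14-day floor. The daily traffic figure is hypothetical.

```python
from math import ceil


def days_to_finish(
    daily_visitors: int,
    conversion_rate: float,
    variants: int,
    conversions_per_variant: int = 100,
    minimum_days: int = 14,  # assumption: two full weeks as the floor
) -> int:
    """Estimate test duration when traffic is split evenly across variants."""
    daily_conversions_per_variant = daily_visitors / variants * conversion_rate
    days_for_sample = ceil(conversions_per_variant / daily_conversions_per_variant)
    return max(days_for_sample, minimum_days)


# Hypothetical page: 300 visitors a day converting at 6.6%.
ab_days = days_to_finish(300, 0.066, variants=2)
abn_days = days_to_finish(300, 0.066, variants=5)

print(f"A/B test: about {ab_days} days")
print(f"Five-way test: about {abn_days} days")
```

And that's the optimistic case, since it only counts raw conversions and ignores how much harder it is to separate five similar pages statistically.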

Ready to stop the manual grind and automate your way to higher conversions? dynares uses AI to build, test, and optimize a high-intent landing page for every single keyword, turning your campaigns into a self-improving revenue engine. See how it works.
